A Hopfield network (Ising model of a neural network or Ising–Lenz–Little model or Amari–Little–Hopfield network) is a spin glass system used to model neural networks, based on Ernst Ising's work with Wilhelm Lenz on the Ising model of magnetic materials.[1] Hopfield networks were first described with respect to recurrent neural networks by Shun'ichi Amari in 1972[2][3] and with respect to biological neural networks by William Little in 1974,[4] and were popularised by John Hopfield in 1982.[5] Hopfield networks serve as content-addressable ("associative") memory systems with binary threshold nodes, or with continuous variables.[6] Hopfield networks also provide a model for understanding human memory.[7][8]

History

The Ising model itself was published in the 1920s as a model of magnetism; however, it was studied at thermal equilibrium, which does not change with time. In 1963, Glauber studied the Ising model evolving in time, as a process towards thermal equilibrium (Glauber dynamics).[9]

A learning memory model based on the Ising model was first proposed by Shun'ichi Amari in 1972[2] and then by William A. Little in 1974,[4] whose work Hopfield acknowledged in his 1982 paper. The Sherrington–Kirkpatrick (SK) model of spin glass, published in 1975, is the Hopfield network with randomly initialized weights. Sherrington and Kirkpatrick found that the energy function of the SK model is highly likely to have many local minima.[5]

Networks with continuous dynamics were developed by Hopfield in his 1984 paper.[6] A major advance in memory storage capacity was achieved by Krotov and Hopfield in 2016[10] through a change in the network dynamics and energy function. This idea was further extended by Demircigil and collaborators in 2017.[11] The continuous dynamics of models with large memory capacity were developed in a series of papers between 2016 and 2020.[10][12][13] Hopfield networks with large memory storage capacity are now called Dense Associative Memories or modern Hopfield networks.

Structure

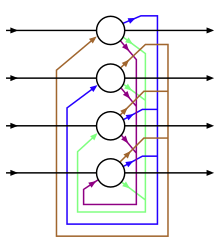

The units in Hopfield nets are binary threshold units, i.e. the units only take on two different values for their states, and the value is determined by whether or not the unit's input exceeds its threshold $U_i$. Discrete Hopfield nets describe relationships between binary (firing or not-firing) neurons $1, 2, \ldots, i, j, \ldots, N$.[5] At a certain time, the state of the neural net is described by a vector $V$, which records which neurons are firing in a binary word of $N$ bits.

The interactions $w_{ij}$ between neurons connect units whose states usually take on values of 1 or −1, and this convention is used throughout this article. Other literature may instead use units that take values of 0 and 1. These interactions are "learned" via Hebb's law of association, such that, for a certain state $V^s$ and distinct nodes $i, j$,

$$w_{ij} = V_i^s V_j^s$$

but $w_{ii} = 0$.

(Note that the Hebbian learning rule takes the form $w_{ij} = (2V_i^s - 1)(2V_j^s - 1)$ when the units assume values in $\{0, 1\}$.)
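The Hebbian rule can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the names `hebbian_weights` and `binary_to_bipolar` are hypothetical, and storing several states by summing their individual contributions $V_i^s V_j^s$ is an assumption (a common convention) that goes beyond the single-state rule stated here. The helper `binary_to_bipolar` applies $V \mapsto 2V - 1$, which is how the 0/1 form of the rule reduces to the ±1 form.

```python
from typing import List, Sequence

def hebbian_weights(patterns: Sequence[Sequence[int]]) -> List[List[int]]:
    """Weights for +1/-1 patterns: w_ij = sum over stored states of V_i V_j, with w_ii = 0."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for v in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no unit is connected to itself
                    w[i][j] += v[i] * v[j]
    return w

def binary_to_bipolar(bits: Sequence[int]) -> List[int]:
    """Map 0/1 unit values to -1/+1 via V -> 2V - 1 (hypothetical helper)."""
    return [2 * b - 1 for b in bits]

# Storing a single state reproduces w_ij = V_i V_j exactly.
w = hebbian_weights([[1, -1, 1]])
print(w)  # [[0, -1, 1], [-1, 0, -1], [1, -1, 0]]

# The 0/1 form (2V_i - 1)(2V_j - 1) yields the same matrix.
print(hebbian_weights([binary_to_bipolar([1, 0, 1])]) == w)  # True
```

The resulting matrix is symmetric with a zero diagonal by construction.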

Once the network is trained, the weights $w_{ij}$ no longer evolve. If a new state of neurons $V^{s'}$ is introduced to the neural network, the net acts on the neurons such that

- $V_i^{s'} \rightarrow 1$ if $\sum_j w_{ij} V_j^{s'} > U_i$

- $V_i^{s'} \rightarrow -1$ if $\sum_j w_{ij} V_j^{s'} < U_i$

where $U_i$ is the threshold value of the $i$th neuron (often taken to be 0).[14] In this way, Hopfield networks can "remember" states stored in the interaction matrix: if a new state $V^{s'}$ that is sufficiently close to a stored state $V^s$ is subjected to the interaction matrix, each neuron changes until the network state matches the original state $V^s$ (see the Updating section below).
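A minimal Python sketch of this recall process, under stated assumptions: thresholds $U_i = 0$, weights $w_{ij} = V_i^s V_j^s$ for a single stored state, units updated one at a time in a fixed order until none changes, and a unit whose input exactly equals its threshold keeping its current state (the two rules leave that case open). The function name `recall` is hypothetical.

```python
from typing import List, Optional, Sequence

def recall(w: Sequence[Sequence[int]], probe: Sequence[int],
           thresholds: Optional[Sequence[float]] = None,
           max_sweeps: int = 100) -> List[int]:
    """Apply the threshold rule unit by unit until no unit changes."""
    n = len(probe)
    u = list(thresholds) if thresholds is not None else [0.0] * n
    v = list(probe)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * v[j] for j in range(n))  # weighted input to unit i
            if h > u[i]:
                new = 1
            elif h < u[i]:
                new = -1
            else:
                new = v[i]  # input equals threshold: keep current state (assumption)
            if new != v[i]:
                v[i] = new
                changed = True
        if not changed:  # fixed point reached
            break
    return v

stored = [1, -1, 1, -1, 1, -1, 1, -1]
n = len(stored)
# Hebbian weights for the single stored state, with w_ii = 0.
w = [[0 if i == j else stored[i] * stored[j] for j in range(n)] for i in range(n)]

probe = [-1, 1, 1, -1, 1, -1, 1, -1]  # first two units flipped
print(recall(w, probe) == stored)  # True
```

Here two of the eight units are flipped and the stored state is recovered. Because $w_{ij}$ is unchanged when $V^s$ is replaced by $-V^s$, the inverted state is also a fixed point of these updates.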

The connections in a Hopfield net typically have the following restrictions:

- $w_{ii} = 0, \forall i$ (no unit has a connection with itself)

- $w_{ij} = w_{ji}, \forall i, j$ (connections are symmetric)

The constraint that weights are symmetric guarantees that the energy function decreases monotonically (it never increases) while the network follows the activation rules.[15] A network with asymmetric weights may exhibit some periodic or chaotic behaviour; however, Hopfield found that this behaviour is confined to relatively small parts of the phase space and does not impair the network's ability to act as a content-addressable associative memory system.
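The monotone-energy claim can be checked numerically. The sketch below assumes the standard Hopfield energy $E = -\frac{1}{2}\sum_{i,j} w_{ij} V_i V_j + \sum_i U_i V_i$, random symmetric Gaussian weights with a zero diagonal, small random thresholds, and asynchronous updates of one randomly chosen unit at a time; the function names, seed, and sizes are illustrative assumptions.

```python
import random
from typing import List, Sequence, Tuple

def is_valid_hopfield(w: Sequence[Sequence[float]]) -> bool:
    """True if w has a zero diagonal and is symmetric."""
    n = len(w)
    return (all(w[i][i] == 0 for i in range(n))
            and all(w[i][j] == w[j][i] for i in range(n) for j in range(n)))

def energy(w: Sequence[Sequence[float]], v: Sequence[int], u: Sequence[float]) -> float:
    """E = -1/2 * sum_ij w_ij V_i V_j + sum_i U_i V_i."""
    n = len(v)
    pair_term = sum(w[i][j] * v[i] * v[j] for i in range(n) for j in range(n))
    return -0.5 * pair_term + sum(u[i] * v[i] for i in range(n))

def run_async(w: Sequence[Sequence[float]], v0: Sequence[int], u: Sequence[float],
              steps: int, rng: random.Random) -> Tuple[List[int], List[float]]:
    """Update one randomly chosen unit per step and record the energy after each step."""
    n = len(v0)
    v = list(v0)
    energies = [energy(w, v, u)]
    for _ in range(steps):
        i = rng.randrange(n)
        h = sum(w[i][j] * v[j] for j in range(n))
        if h > u[i]:
            v[i] = 1
        elif h < u[i]:
            v[i] = -1
        energies.append(energy(w, v, u))
    return v, energies

rng = random.Random(0)
n = 12
w = [[0.0] * n for _ in range(n)]
for i in range(n):
    for j in range(i + 1, n):
        w[i][j] = w[j][i] = rng.gauss(0.0, 1.0)  # symmetric; diagonal stays 0
u = [rng.gauss(0.0, 0.1) for _ in range(n)]
v0 = [rng.choice([-1, 1]) for _ in range(n)]
final_state, energies = run_async(w, v0, u, steps=200, rng=rng)

print(is_valid_hopfield(w))  # True
print(all(b <= a + 1e-9 for a, b in zip(energies, energies[1:])))  # True
```

The check works because flipping unit $i$ changes the energy by $\Delta E = -(V_i^{\text{new}} - V_i^{\text{old}})\left(\sum_j w_{ij} V_j - U_i\right)$, which is negative whenever the unit actually changes state; deriving this expression uses both $w_{ij} = w_{ji}$ and $w_{ii} = 0$.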

Hopfield also modeled neural nets for continuous values, in which the electric output of each neuron is not binary but some value between 0 and 1.[6] He found that this type of network was also able to store and reproduce memorized states.
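A rough Python sketch of such continuous-valued dynamics, assuming the leaky-integrator form $du_i/dt = -u_i + \sum_j w_{ij} V_j + I_i$ with outputs $V_i = g(u_i)$ given by a logistic sigmoid. Setting capacitances and resistances to 1, a gain of 2, simple Euler integration, and the names `sigmoid` and `simulate_continuous` are all illustrative assumptions rather than Hopfield's exact setup. The weights come from a single ±1 pattern.

```python
import math
from typing import List, Sequence

def sigmoid(x: float, gain: float) -> float:
    """Logistic output function g(u), with values strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-gain * x))

def simulate_continuous(w: Sequence[Sequence[float]], u0: Sequence[float],
                        inputs: Sequence[float], gain: float = 2.0,
                        dt: float = 0.1, steps: int = 500) -> List[float]:
    """Euler steps of du_i/dt = -u_i + sum_j w_ij V_j + I_i, with V_i = g(u_i)."""
    n = len(u0)
    u = list(u0)
    for _ in range(steps):
        v = [sigmoid(x, gain) for x in u]  # current neuron outputs in (0, 1)
        u = [u[i] + dt * (-u[i] + sum(w[i][j] * v[j] for j in range(n)) + inputs[i])
             for i in range(n)]
    return [sigmoid(x, gain) for x in u]

pattern = [1, -1, 1, -1]
w = [[0 if i == j else pattern[i] * pattern[j] for j in range(4)] for i in range(4)]
outputs = simulate_continuous(w, u0=[0.5, -0.5, 0.5, -0.5], inputs=[0.0] * 4)
print([round(x, 3) for x in outputs])
```

Started from internal states that agree with the pattern, the units with pattern value +1 end up with larger outputs than those with value −1, and every output stays between 0 and 1.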

Notice that every pair of units $i$ and $j$ in a Hopfield network has a connection that is described by the connectivity weight $w_{ij}$. In this sense, the Hopfield network can be formally described as a complete undirected graph $G = \langle V, f \rangle$, where $V$ is a set of McCulloch–Pitts neurons and $f: V^2 \rightarrow \mathbb{R}$ is a function that links pairs of units to a real value, the connectivity weight.
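The graph view can be made concrete with a small Python sketch that stores $f$ as a dictionary keyed by unordered pairs of neuron indices, so that $f(i, j)$ and $f(j, i)$ are the same entry. The names `as_complete_graph` and `f`, and the use of integer labels $0, \ldots, N-1$ for the neurons, are assumptions for illustration. A network of $N$ units then has $N(N-1)/2$ edges.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Sequence

def as_complete_graph(w: Sequence[Sequence[float]]) -> Dict[FrozenSet[int], float]:
    """Edge map f for the complete undirected graph on neurons 0..N-1."""
    n = len(w)
    graph: Dict[FrozenSet[int], float] = {}
    for i, j in combinations(range(n), 2):  # every unordered pair i < j, no self-loops
        if w[i][j] != w[j][i]:
            raise ValueError(f"asymmetric weights at ({i}, {j})")
        graph[frozenset((i, j))] = w[i][j]
    return graph

def f(graph: Dict[FrozenSet[int], float], i: int, j: int) -> float:
    """Connectivity weight of the edge {i, j}; argument order does not matter."""
    return graph[frozenset((i, j))]

pattern = [1, -1, 1, -1]
w = [[0 if i == j else pattern[i] * pattern[j] for j in range(4)] for i in range(4)]
g = as_complete_graph(w)
print(len(g))  # 6, i.e. 4 * 3 / 2 edges
print(f(g, 0, 1), f(g, 1, 0))  # -1 -1
```

Keying on `frozenset` builds the undirectedness into the data structure instead of relying on callers to keep $w_{ij}$ and $w_{ji}$ in sync.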

Updating

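Updating one unit (node in the graph simulating the artificial neuron) in the Hopfield network is performed using the following rule:

$$V_i \leftarrow \begin{cases} +1 & \text{if } \sum_j w_{ij} V_j \geq U_i, \\ -1 & \text{otherwise,} \end{cases}$$

where $w_{ij}$ is the strength of the connection weight from unit $j$ to unit $i$, $V_j$ is the state of unit $j$, and $U_i$ is the threshold of unit $i$.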
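The single-unit update can be sketched in Python, assuming the convention that unit $i$ becomes +1 when $\sum_j w_{ij} V_j \geq U_i$ and −1 otherwise, so that ties go to +1. The function name `update_unit` and its parameters are illustrative, not a standard API.

```python
from typing import List, Sequence

def update_unit(w: Sequence[Sequence[float]], states: Sequence[int],
                thresholds: Sequence[float], i: int) -> List[int]:
    """Return a copy of `states` with unit i set by the threshold rule (ties go to +1)."""
    field = sum(w[i][j] * states[j] for j in range(len(states)))  # sum_j w_ij V_j
    new_states = list(states)
    new_states[i] = 1 if field >= thresholds[i] else -1
    return new_states

w = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
thresholds = [0.0, 0.0, 0.0]
print(update_unit(w, [-1, 1, 1], thresholds, 0))   # input 2 >= 0 -> [1, 1, 1]
print(update_unit(w, [-1, 1, -1], thresholds, 0))  # input 0 == 0 -> [1, 1, -1]
print(update_unit(w, [1, -1, -1], thresholds, 0))  # input -2 < 0 -> [-1, -1, -1]
```

Sending ties to +1 is one convention; other formulations leave the unit's state unchanged when its input exactly equals its threshold.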


The text is available under the Creative Commons Attribution/Share-Alike License 3.0 Unported; additional terms may apply. See the Terms of Use page for details.
